Modern machine learning and AI research have moved rapidly from the lab to our IDEs, with tools like Azure’s Cognitive Services providing API-based access to pretrained models. There are many different approaches to delivering AI services. One of the more promising for working with language is generative pretraining, the technique behind the GPT (generative pretrained transformer) family of models, which are trained on large amounts of text.

OpenAI and Microsoft

The OpenAI research lab pioneered this technique, publishing the initial paper on the topic in 2018. The model it uses has been through several iterations since the original GPT, including GPT-2, which was trained without supervision on untagged data to mimic human writing. Built on top of 40GB of public internet content, GPT-2 required significant training to produce a model with 1.5 billion parameters. It was followed by GPT-3, a much larger model with 175 billion parameters. Exclusively licensed to Microsoft, GPT-3 is the basis for tools like the programming code-focused Codex used by GitHub Copilot and the image-generating DALL-E.

With a model like GPT-3 requiring significant amounts of compute and memory, on the order of thousands of petaflop/s-days, it’s an ideal candidate for cloud-based high-performance computing on specialized supercomputer hardware. Microsoft has built its own Nvidia-based servers for supercomputing on Azure, with its cloud instances appearing on the TOP500 supercomputing list. Azure’s AI servers are built around Nvidia Ampere A100 Tensor Core GPUs, interconnected via a high-speed InfiniBand network.

Adding OpenAI to Azure

OpenAI’s generative AI tools have been built and trained on the Azure servers. As part of a long-running deal between OpenAI and Microsoft, OpenAI’s tools are being made available as part of Azure, with Azure-specific APIs and integration with Azure’s billing services. After some time in private preview, the Azure OpenAI suite of APIs is now generally available, with support for GPT-3 text generation and the Codex code model. Microsoft has said it will add DALL-E image generation in a future update.

That doesn’t mean that anyone can build an app that uses GPT-3; Microsoft is still gating access to ensure that projects comply with its ethical AI usage policies and are tightly scoped to specific use cases. You also need to be a direct Microsoft customer to get access to Azure OpenAI. Microsoft uses a similar process for access to its Limited Access Cognitive Services, where there’s a possibility of impersonation or privacy violations.

Those policies are likely to remain strict, and some areas, such as health services, will probably require extra protection to meet regulatory requirements. Microsoft’s own experiences with AI language models have taught it a lesson it doesn’t want to repeat. As an added protection, there are content filters on inputs and outputs, with alerts for both Microsoft and developers.

Exploring Azure OpenAI Studio

Once your account has been approved to use Azure OpenAI, you can start to build code that uses its API endpoints. The appropriate Azure resources can be created from the portal, the Azure CLI, or ARM templates. If you’re using the Azure Portal, create a resource that’s allocated to your account and to the resource group you intend to use for your app and any associated Azure services and infrastructure. Next, name the resource and select the pricing tier. At the moment, there’s only one pricing option, but this will likely change as Microsoft rolls out new service tiers.

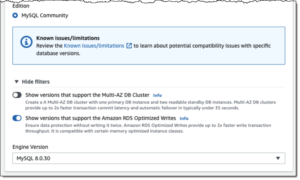

With a resource in place you can now deploy a model using Azure OpenAI Studio. This is where you’ll do most of your work with OpenAI. Currently, you can choose between members of the GPT-3 family of models, including the code-based Codex. Additional models use embeddings, complex semantic information that is optimized for search.

Within each family, there is a set of different models with names that indicate both cost and capability. If you’re using GPT-3, Ada is the lowest cost and least capable, and Davinci is the most expensive and most capable. Each model is a superset of the previous one, so as tasks get more complex, you don’t need to change your code; you simply choose a different model. Interestingly, Microsoft recommends starting with the most capable model when designing an OpenAI-powered application, as this lets you tune the underlying model for price and performance when you go into production.

Working with model customization

Although GPT-3’s text completion features have gone viral, in practice your application will need to be much more focused on your specific use case. You don’t want GPT-3 to power a support service that regularly gives irrelevant advice. You must build a custom model using training examples that pair inputs (prompts) with desired outputs, which Azure OpenAI calls “completions.” It’s important to have a large set of training data, and Microsoft recommends using several hundred examples. You can include all your prompts and completions in one JSON file (in practice, JSON Lines, with one example per line) to simplify managing your training data.
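As a rough illustration of what such a training file might look like, the sketch below writes prompt and completion pairs as one JSON object per line. The `prompt` and `completion` field names follow the OpenAI fine-tuning convention; the example records, the ` ->` separator, and the helper name `to_jsonl` are hypothetical, so check the current Azure OpenAI documentation for the exact format your model expects.

```python
import json

# Hypothetical support-desk examples; a real training set needs several hundred.
examples = [
    {"prompt": "My laptop will not power on ->", "completion": " hardware"},
    {"prompt": "I forgot my VPN password ->", "completion": " account"},
]


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records) + "\n"


# The resulting text can be saved to a file and uploaded as training data.
training_file_text = to_jsonl(examples)
```

Keeping every example in a single file like this makes it straightforward to version the training data alongside your application code.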

With a customized model in place, you can use Azure OpenAI Studio to test how GPT-3 will work for your scenario. A basic playground lets you see how the model responds to specific prompts: It works like a simple console app, where you type in a prompt and get back an OpenAI completion. Microsoft describes building a good prompt as “show, don’t tell,” suggesting that prompts need to be as explicit as possible to get the best output. The playground also helps train your model, so if you’re building a classifier, you can provide a list of text and expected outputs before delivering inputs and a trigger to get a response.
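The “show, don’t tell” pattern for a classifier can be sketched as a small prompt builder: labeled examples first, then the new input, then a trigger for the model to complete. The category names, the `Message:`/`Category:` labels, and the function name are illustrative assumptions, not anything Azure OpenAI prescribes.

```python
def build_classifier_prompt(examples, new_text):
    """Assemble a few-shot prompt: labeled examples, the new input, then a trigger."""
    lines = ["Classify each support message as 'billing' or 'technical'.", ""]
    for text, label in examples:
        lines.append(f"Message: {text}")
        lines.append(f"Category: {label}")
        lines.append("")
    lines.append(f"Message: {new_text}")
    lines.append("Category:")  # the trigger the model is expected to complete
    return "\n".join(lines)


# Hypothetical labeled examples that show the model the expected output.
examples = [
    ("I was charged twice this month.", "billing"),
    ("The app crashes when I open settings.", "technical"),
]
prompt = build_classifier_prompt(examples, "My invoice shows the wrong amount.")
```

Pasting the resulting `prompt` into the playground is a quick way to check whether a handful of examples is enough before investing in a fully customized model.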

One useful feature of the playground is the ability to set an intent and expected behaviors early, so if you’re using OpenAI to power a help desk triage tool, you can set the expectation that the output will be polite and calm, ensuring it won’t mimic an angry user. The same tools can be used with the Codex model, so you can see how it works as a tool for code completion or as a dynamic assistant.

Writing code to work with Azure OpenAI

Once you’re ready to start coding, you can use your deployment’s REST endpoints, either directly or with the OpenAI Python libraries. The latter is probably your quickest route to live code. You’ll need the endpoint URL, an authentication key, and the name of your deployment. Once you have these, set the appropriate environment variables for your code. As always, in production it’s best not to hard-code keys and to use a tool like Azure Key Vault to manage them.
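A minimal sketch of loading those three settings from the environment might look like the following. The variable names `AZURE_OPENAI_ENDPOINT`, `AZURE_OPENAI_KEY`, and `AZURE_OPENAI_DEPLOYMENT` are assumptions chosen for this example; use whatever names your own deployment scripts define.

```python
import os


def load_azure_openai_settings(env=os.environ):
    """Read connection settings from the environment, failing loudly if any are missing."""
    # Hypothetical variable names; nothing here is mandated by Azure.
    names = {
        "endpoint": "AZURE_OPENAI_ENDPOINT",
        "api_key": "AZURE_OPENAI_KEY",
        "deployment": "AZURE_OPENAI_DEPLOYMENT",
    }
    missing = [var for var in names.values() if not env.get(var)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {field: env[var] for field, var in names.items()}
```

Failing at startup when a setting is missing is usually easier to debug than an authentication error on the first API call.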

Calling an endpoint is easy enough: Simply use the openai.Completion.create method to get a response, setting max_tokens to cap the length of the generated text (the prompt and the response together must fit within the model’s token limit). The response object returned by the API contains the text generated by your model, which can be extracted, formatted, and then used by the rest of your code. The basic calls are simple, and there are additional parameters, such as temperature and top_p, that your code can use to manage the response. These control the model’s creativity and how it samples its results. You can use them to keep responses straightforward and consistent.
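The call described above can be sketched as follows, assuming the pre-1.0 `openai` Python package, which exposes `openai.Completion.create` and module-level Azure settings. The API version string, the default parameter values, and the helper names are assumptions for illustration; the `openai` import sits inside `complete` so the helpers can be exercised without the package or a live endpoint.

```python
def build_completion_args(deployment, prompt, max_tokens=100,
                          temperature=0.2, top_p=1.0):
    """Collect keyword arguments for openai.Completion.create."""
    return {
        "engine": deployment,        # the Azure deployment name
        "prompt": prompt,
        "max_tokens": max_tokens,    # upper bound on generated tokens
        "temperature": temperature,  # lower values give less "creative" output
        "top_p": top_p,              # nucleus sampling cutoff
    }


def extract_text(response):
    """Pull the generated text out of a completion response."""
    return response["choices"][0]["text"].strip()


def complete(endpoint, api_key, deployment, prompt):
    """Send one prompt to an Azure OpenAI deployment and return its text."""
    import openai  # assumes the pre-1.0 openai package is installed

    openai.api_type = "azure"
    openai.api_base = endpoint
    openai.api_version = "2022-12-01"  # assumed version; check your resource
    openai.api_key = api_key
    response = openai.Completion.create(**build_completion_args(deployment, prompt))
    return extract_text(response)
```

Separating argument building and text extraction from the network call keeps the sampling settings in one place and makes the surrounding code easy to test with canned responses.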

If you’re using another language, use its REST and JSON parsing tools. You can find an API reference in the Azure OpenAI documentation or take advantage of Azure’s GitHub-hosted Swagger specifications to generate API calls and work with the returned data. This approach works well with IDEs like Visual Studio.
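The underlying REST request has the same shape in any language. It is sketched here in Python with only the standard library, to stay consistent with the other examples. The URL pattern and `api-key` header follow the Azure OpenAI REST reference as I understand it; the API version and the function name are assumptions, so confirm them against the documentation or the Swagger specification before relying on them.

```python
import json
import urllib.request


def build_completion_request(endpoint, api_key, deployment, prompt,
                             max_tokens=100, api_version="2022-12-01"):
    """Build (but do not send) a POST request to a deployment's completions endpoint."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/completions?api-version={api_version}")
    body = json.dumps({"prompt": prompt, "max_tokens": max_tokens}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )
```

Passing the returned request to `urllib.request.urlopen` and parsing the body with `json.load` would yield the same `choices` structure the Python library returns.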

Azure OpenAI pricing

One key element of OpenAI models is their token-based pricing model. Tokens in Azure OpenAI aren’t the familiar authentication tokens; they’re tokenized sections of strings, which are created using an internal statistical model. OpenAI provides a tool on its site that shows how strings are tokenized, to help you understand how your queries are billed. You can expect a token to be roughly four characters of text, though it can be less or more. As a rule of thumb, 75 words (roughly a paragraph of normal text) need about 100 tokens.
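Those rules of thumb can be turned into a quick estimator. This is only a back-of-the-envelope sketch based on the four-characters and 75-words-per-100-tokens figures above; the function names are made up for this example, and a real tokenizer will give different counts for any particular string.

```python
import math


def estimate_tokens(text, chars_per_token=4):
    """Rough token count from character length (about four characters per token)."""
    return math.ceil(len(text) / chars_per_token)


def estimate_tokens_from_words(word_count):
    """Rough token count from word count (about 100 tokens per 75 words)."""
    return math.ceil(word_count * 100 / 75)
```

For billing that matters, use OpenAI’s tokenizer tool rather than an estimate like this.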

The more complex the model, the higher priced the tokens. Base model Ada comes in at about $0.0004 per 1,000 tokens, and the high-end Davinci is $0.02. If you apply your own tuning, there’s a storage cost, and if you’re using embeddings, costs can be an order of magnitude higher due to increased compute requirements. There are additional costs for fine-tuning models, starting at $20 per compute hour. The Azure website has sample prices, but actual pricing can vary depending on your organization’s account relationship with Microsoft.
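To see how the per-token prices translate into a bill, the arithmetic can be sketched as below. The two prices are the approximate figures quoted above, not a live price list, and the dictionary and function names are hypothetical.

```python
# Approximate prices in US dollars per 1,000 tokens, as quoted in the text.
PRICE_PER_1K_TOKENS = {"ada": 0.0004, "davinci": 0.02}


def estimate_cost(model, tokens):
    """Estimated charge in dollars for processing a number of tokens."""
    return PRICE_PER_1K_TOKENS[model] * tokens / 1000
```

At these rates, a million tokens would cost about 40 cents on Ada and about $20 on Davinci, which is why it pays to test whether a cheaper model handles your workload.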

Perhaps the most surprising thing about Azure OpenAI is how simple it is. As you’re using prebuilt models (with the option of some fine-tuning), all you need to do is apply some basic training, understand how prompts generate output, and link the tools to your code, generating text content or code as and when it’s needed.

Copyright © 2023 IDG Communications, Inc.