Snowflake continues to set the standard for data in the cloud by removing the need to perform maintenance tasks on your data platform and giving you the freedom to choose your data model methodology for the cloud. Data Vault supports multi-tenancy, and by combining it with Snowflake’s Row Access Policy (RAP) you can simplify authorization to the Data Vault tables themselves and make the process data-driven.

This post is number 7 in our “Data Vault Techniques on Snowflake” series:

- Immutable Store, Virtual End Dates

- Snowsight Dashboards for Data Vault

- Point-in-Time Constructs and Join Trees

- Querying Really Big Satellite Tables

- Streams and Tasks on Views

- Conditional Multi-Table INSERT, and Where to Use It

- Row-Access Policies + Multi-Tenancy

- Hub Locking on Snowflake

- Virtual Warehouses and Charge Back

A reminder of the Data Vault table types:

The tenancy applied to the Data Vault will be used to build our query assistance tables.

Multi-tenancy is the concept of having a single instance of an application or platform serve multiple customers, the “tenants.” Each tenant shares the underlying application, but each tenant’s collateral is isolated and remains invisible to the other tenants. The big cloud service providers (AWS, Azure, and GCP) provide Infrastructure-as-a-Service (IaaS) and Snowflake is one of their tenants. Snowflake itself is a Software-as-a-Service (SaaS) platform deployed in a virtual private cloud (VPC) instance and serves multiple tenants (Snowflake accounts) per deployment across the CSPs.

The concept of multi-tenancy is applicable to a database as well. Salesforce’s SaaS includes a relational database management system (RDBMS) that hosts its customers on a single database but ensures each customer’s data is isolated and remains invisible to other customers.

Within Snowflake’s SaaS environment, customers not only enjoy near-infinite Data Cloud scalability across multiple CSPs using Snowflake Secure Data Sharing; Snowflake’s security posture also ensures that your Snowflake accounts are isolated and remain invisible to other accounts.

Multi-tenancy is a concept in Data Vault, too—Data Vault can be configured to host multiple tenants of a shared enterprise data model, while keeping the table content invisible to other tenants as well. In this blog post we will discuss just how to do that, and combine Data Vault multi-tenancy with Snowflake technology.

Snowflake deployments as of mid-2022.

What Data Vault means to a business

Data Vault is often compared to other data modeling methodologies such as third normal form or Kimball dimensional marts. Each method has a focus, and while dimensional modeling focuses on information delivery, it becomes cumbersome to maintain when changes inevitably need to be applied. Data Vault focuses on how to maintain auditability, and remain agile while tracking the two most important information mapping concepts of a business:

- Object state

- Object relationships

We don’t need to investigate third normal form because Bill Inmon, widely regarded as the father of the data warehouse and of its third-normal-form approach, has endorsed Data Vault and updated his definition of a data warehouse accordingly:

“A data warehouse is a subject-oriented, integrated (by business key), time-variant and non-volatile collection of data in support of management’s decision-making process, and/or in support of auditability as a system-of-record.”

Bill Inmon, at World Wide Data Vault Consortium 2019

No matter what business process, lean value stream, or domain you are mapping, what a business does is always based on a business object. We want to know its state (and perhaps what its state was) and how to derive value from that object by combining it with other business objects to form a unit of work, aka a transaction or relationship.

All software applications and systems are purchased to automate business processes, and it is the scalable and flexible nature of Data Vault that allows us to capture the business process outcomes into hub, link, and satellite tables. Every variation of these table types should be qualified through business process understanding, data profiling, and technical debt recognized and budgeted to be dealt with in a product backlog. For every tactical solution there must be a card created to track, assign, and deal with technical debt.

Passive integration

Because Data Vault is a top-down data modeling approach, how do we passively integrate the source systems into our enterprise model? Recognize that COTS (commercial off-the-shelf) software solutions will have multiple tenants of their own, and will likely have an industry-specific, but otherwise generic, data model to serve as many of their customers as possible. What the vendor labels as an account may not be how your enterprise defines an account. Your challenge is to integrate these business rule engines into a Data Vault using recognizable hub table naming standards based on the defined business architecture.

Defined at your enterprise level is your information map, which anyone in your business will understand as the true interpretation and meaning of common business objects. With that in mind, let’s briefly define some important concepts of what this means for your Data Vault.

1. Recognize that multiple source systems, each with its own definitions, will map the business keys of a business object to a common hub table.

Once a business architect defines the business object, they can integrate multiple source systems accordingly. For those source systems where they could have a clash, they’d introduce a persistent salt for that business key and call it the business key collision code (BKCC). This method of separating business objects is used very sparingly. The ideal scenario is that the same business key representing the same business object is used across source systems; however, we recognize that in operational reality this could be a challenge.

2. Recognize that you may have multiple tenants of the same data model who cannot necessarily share its content, yet who still use the same definitions of what a business object is, according to your enterprise.

To reuse the same Data Vault model is to reuse the same hub tables without exposing the business object state and relationships to unauthorized business users.

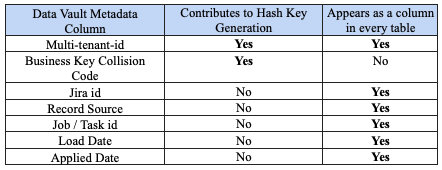

3. What other metadata columns could we add to a record to give us the robust data provenance we seek? Here are some suggestions:

- Job or Task id – Link this record to whichever task or job inserted that record

- Jira id – What initiative led to this record being inserted into the enterprise Data Vault model? The Jira id is linked to a document that in essence is a mandatory requirement according to DevOps best practices.

- Record source – Where the record came from, but also a space to include the business rule name and version. Any change to the business rule version could trigger a change record in a satellite table.

- Applied date timestamp – The snapshot date of the state of the business object at the time the business rule outcome was captured.

- Load date timestamp – The timestamp of when the record is loaded to Data Vault—in essence, a version timestamp.

To summarize:

- hash(tenant id || BKCC || business key(s)) = surrogate hash key

- For every Data Vault table, we include a column to identify the record tenant.

- Because the tenant id and BKCC are part of the input used to generate the hash key, SQL equi-joins on the hash key digest only ever join related content, that is, content with the same tenant id, BKCC, and business key(s).

If you are not sure what to set the tenant ID to, or if you are unsure if you need one (and this is applicable to BKCC as well), set these IDs to “default.”
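As a minimal sketch of the summary above, the function below builds a surrogate hash key from the tenant id, the BKCC, and the business key(s). The `||` delimiter, the trim-and-uppercase normalization, the choice of SHA-1, and the tenant codes `TRS` and `FIN` are all assumptions made for illustration; use whatever hashing standard your Data Vault already follows.

```python
import hashlib

DELIMITER = "||"  # assumed separator between the key components


def hash_key(business_keys, tenant_id="default", bkcc="default"):
    """Return a hex SHA-1 digest over tenant id, BKCC, and business key(s)."""
    # Trim and uppercase every part so the same key always hashes the same
    # way (an assumed normalization rule, not a Snowflake requirement).
    parts = [tenant_id, bkcc, *business_keys]
    normalized = DELIMITER.join(str(part).strip().upper() for part in parts)
    return hashlib.sha1(normalized.encode("utf-8")).hexdigest()


# The same business key under two hypothetical tenants yields two hash keys.
treasury_key = hash_key(["ACC-1001"], tenant_id="TRS")
finance_key = hash_key(["ACC-1001"], tenant_id="FIN")
print(treasury_key != finance_key)  # True
```

Because the tenant id is part of the hashed string, two tenants that happen to use the same business key never collide on the same hub record.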

Multi-tenant design

We have defined that multi-tenancy is built into the surrogate hash key and that these keys are used to join hub, link, and satellite tables via SQL equi-join conditions. We also need to filter these table types by the tenant itself. Because the hub table contains the business key and the appropriate tenant id, it is the only place where the plain-text business key is required.

Therefore, every other Data Vault table is only contextually applicable to the business key if we can join to it by the generated surrogate hash key.

- A satellite table has no business key and requires an equi-join to the hub table to find what that business key is.

- A link table has no business key and requires an equi-join to the hub table(s) to find what the business key is.
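The sketch below illustrates the two points above with a hub and a satellite that share only the hash key. It uses Python's built-in `sqlite3` as a stand-in for Snowflake so that it runs anywhere; the column names (`dv_hashkey_hub_account`, `dv_loaddate`, `balance`), the tenant codes, and the sample values are hypothetical.

```python
import hashlib
import sqlite3


def hash_key(tenant_id, bkcc, business_key):
    # Assumed hashing rule: SHA-1 over the delimited key components.
    raw = "||".join([tenant_id, bkcc, business_key])
    return hashlib.sha1(raw.encode("utf-8")).hexdigest()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table hub_account (
        dv_hashkey_hub_account text primary key,
        dv_tenantid text,
        dv_bkcc text,
        account_id text          -- the plain-text business key lives here only
    );
    create table sat_account (
        dv_hashkey_hub_account text,
        dv_tenantid text,
        dv_loaddate text,
        balance real             -- no business key in the satellite
    );
""")

# Two tenants load the same business key; each gets its own hash key.
for tenant, balance in [("TRS", 100.0), ("FIN", 250.0)]:
    hk = hash_key(tenant, "default", "ACC-1001")
    conn.execute("insert into hub_account values (?, ?, ?, ?)",
                 (hk, tenant, "default", "ACC-1001"))
    conn.execute("insert into sat_account values (?, ?, ?, ?)",
                 (hk, tenant, "2022-06-30", balance))

# The equi-join recovers the business key; the tenant filter is on the hub.
rows = conn.execute("""
    select h.dv_tenantid, h.account_id, s.balance
    from hub_account h
    join sat_account s
      on s.dv_hashkey_hub_account = h.dv_hashkey_hub_account
    where h.dv_tenantid = ?
""", ("TRS",)).fetchall()
print(rows)  # [('TRS', 'ACC-1001', 100.0)]
```

Without the `where` clause the join returns exactly two rows, one per tenant, because the hash keys never match across tenants.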

Now let’s design the components needed for multi-tenancy of Data Vault on Snowflake.

Multi-tenant, passive integration, multi-source with RAPs applied for Role-Based Access Controls (RBAC) authorized access.

Step 1: Who are the tenants?

Like identifying the source systems for your Data Vault, you need to standardize the tenants of your enterprise Data Vault model via codes. This list will be what we will define as our policy entitlements table.

Note: These are made-up examples—your organization requirements may differ.

Remember, to qualify as multi-tenant is to use the same hub table structure while ensuring that each tenant’s material content is easily discernible.

Step 2: Design Role-Based Access Controls (RBAC)

Snowflake RBAC will be used in combination with the entitlements table, ensuring a robust and centralized comprehension of who the tenants are. Although access to the data objects could be shared, using the correct Snowflake context ensures that only the authorized roles can see the data they are entitled to. For this design we will use Snowflake’s current_role() function. (Snowflake’s documentation has the full list of context functions.)

Functional role automation can be managed externally via your single sign-on (SSO) provider, and groups managed via your system for cross-domain identity management (SCIM). These roles will map 1:1 to Snowflake and we can define that access by extending the entitlements table with the role names.

Important: Be sure to keep these role names uppercased!

Oversimplified to illustrate the concept only—your requirements may differ.
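To make the shape of the entitlements table concrete, here is a small in-memory sketch that pairs tenant codes with role names. The tenant codes, tenant names, and the underscore spelling of the role names are made up for illustration. Role names are uppercased on the way in because Snowflake stores unquoted identifiers in uppercase, so that is the form current_role() returns.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entitlement:
    tenant_code: str
    tenant_name: str
    role: str


def make_entitlement(tenant_code, tenant_name, role):
    # Uppercase the role so it compares equal to current_role() output
    # for roles that were created with unquoted names.
    return Entitlement(tenant_code, tenant_name, role.upper())


# Hypothetical entitlements: which role may see which tenant's rows.
ENTITLEMENTS = [
    make_entitlement("TRS", "Treasury", "trs_builder"),
    make_entitlement("TRS", "Treasury", "trs_analyst"),
    make_entitlement("FIN", "Finance", "fin_builder"),
]


def tenants_for(role):
    """Return the sorted tenant codes a role is entitled to."""
    wanted = role.upper()
    return sorted({e.tenant_code for e in ENTITLEMENTS if e.role == wanted})


print(tenants_for("trs_builder"))  # ['TRS']
print(tenants_for("FIN_ANALYST"))  # []
```

A role with no row in the table, such as the hypothetical `FIN_ANALYST` here, is entitled to nothing.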

The roles above are linked to access roles that grant privileges to access the Data Vault assets themselves. Because the content is being used to provide analytics, the associated business analyst should only have SELECT access privileges on information marts. The builder role will need SELECT access privilege over Data Vault and be able to CREATE TABLEs in our query assistance schema and CREATE VIEWs in the information mart schema. How you choose to manage and segregate your zones may differ, of course, but this example is designed to illustrate how to use RBAC and RAP for your Data Vault.

Simple RBAC

Step 3: Define RAP

The entitlements table serves as the base for the data-driven RAPs needed to control the access to your data. Combined with the authorized role, the RAP will only allow row-level selection of the content that the role is entitled to. For the common hub, the authorized role can only see the business keys it is entitled to. The same is true of link and satellite tables, but these are generally single-source tables.

    create row access policy datavault_tenants
    as (dv_tenantid varchar) returns boolean ->
      'SYSADMIN' = current_role()
      or exists (
        select 1
        from utilities.datavault_tenants
        where role = current_role()
          and tenant_code = dv_tenantid
      );

When querying or using a data object affected by RAP, Snowflake will evaluate access at query time and use a dynamic secure view over that affected table. This means that every time you use the table, the internal view itself is executed.
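The sketch below emulates that query-time evaluation outside Snowflake. It is not how Snowflake implements policies internally: it appends the same predicate as the policy body to a query in Python's built-in `sqlite3`, with a bound `:role` parameter standing in for current_role(). The role names, tenant codes, and account ids are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table datavault_tenants (role text, tenant_code text);
    insert into datavault_tenants values ('TRS_BUILDER', 'TRS');

    create table hub_account (dv_tenantid text, account_id text);
    insert into hub_account values ('TRS', 'ACC-1001'), ('FIN', 'ACC-2002');
""")

# Same logic as the policy body; :role stands in for current_role().
POLICY_PREDICATE = """
    'SYSADMIN' = :role
    or exists (
        select 1
        from datavault_tenants t
        where t.role = :role
          and t.tenant_code = h.dv_tenantid
    )
"""


def visible_accounts(role):
    """Return the account ids a role can see once the predicate is applied."""
    sql = (
        "select h.account_id from hub_account h "
        f"where {POLICY_PREDICATE} order by h.account_id"
    )
    return [row[0] for row in conn.execute(sql, {"role": role})]


print(visible_accounts("TRS_BUILDER"))  # ['ACC-1001']
print(visible_accounts("SYSADMIN"))     # ['ACC-1001', 'ACC-2002']
print(visible_accounts("FIN_ANALYST"))  # []
```

The predicate is re-evaluated on every call, so adding or removing a row in the entitlements table changes what a role sees without touching any query.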

RAP is applied by using the TRS-BUILDER functional role.

The diagram above is a pretty simple design, but this can be expanded to more advanced RAP topics, such as layering of access based on the view over the Data Vault. Still based on functional roles, this expands the row-level access to sub-categories of roles and perhaps to aggregated views of the underlying content to obfuscate where necessary. Keep in mind that the more layers you add, the more context Snowflake needs to execute at query time, so keep these as flat as possible in your design.

Let’s see what happens when we use the same builder role to build a PIT over a hub that does not have treasury data, in other words, a hub whose content TRS BUILDER is not entitled to.

RAP prevents unauthorized access to the underlying data.

With RAP applied to the hub_account table and its adjacent satellite tables, the PIT table that gets created retrieves no records from those tables. Essentially, we have applied a pseudo-filter without explicitly defining it in our PIT table construction—cool!
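A rough way to picture this pseudo-filter is the pure-Python sketch below. The policy check is applied to the hub and satellite rows before a simplified PIT is built, where the PIT records, per hub key, the latest satellite load date on or before the snapshot date. The role names, tenant codes, hash key labels, and dates are hypothetical, and a real PIT build would run as SQL inside Snowflake.

```python
from datetime import date

# Hypothetical (role, tenant_code) entitlement pairs.
ENTITLEMENTS = {("TRS_BUILDER", "TRS"), ("FIN_BUILDER", "FIN")}

# A hub and satellite that hold finance content only.
HUB_ACCOUNT = [{"hash_key": "hk_fin_1", "dv_tenantid": "FIN"}]
SAT_ACCOUNT = [
    {"hash_key": "hk_fin_1", "dv_tenantid": "FIN", "load_date": date(2022, 6, 1)},
    {"hash_key": "hk_fin_1", "dv_tenantid": "FIN", "load_date": date(2022, 6, 15)},
]


def policy_filter(rows, role):
    # Stand-in for the row access policy: keep only entitled rows.
    return [r for r in rows if (role, r["dv_tenantid"]) in ENTITLEMENTS]


def build_pit(role, snapshot_date):
    """Build a simplified PIT from whatever rows the role is allowed to see."""
    hub = policy_filter(HUB_ACCOUNT, role)
    sat = policy_filter(SAT_ACCOUNT, role)
    pit = []
    for h in hub:
        loads = [
            s["load_date"]
            for s in sat
            if s["hash_key"] == h["hash_key"] and s["load_date"] <= snapshot_date
        ]
        pit.append({
            "hash_key": h["hash_key"],
            "snapshot_date": snapshot_date,
            "sat_load_date": max(loads, default=None),
        })
    return pit


print(build_pit("TRS_BUILDER", date(2022, 6, 30)))       # []
print(len(build_pit("FIN_BUILDER", date(2022, 6, 30))))  # 1
```

The PIT logic itself never mentions a tenant; the empty result for the treasury builder comes entirely from the policy step.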

TRS ANALYST needs SELECT privileges only on the information mart view, because it runs its SELECT statement against the view and not against the underlying data. The query returns only the data that the assigned role is authorized for, so the role does not need explicit SELECT privileges on the underlying Data Vault tables (hubs, links, and satellites). A role that is not authorized to see the content, such as the finance role in the diagram below (FIN ANALYST), gets no records back. This role does not appear in our entitlements table.

The same mart will show/hide results depending on the role.

Access beyond a single Snowflake account

Additional RAPs can be defined if you need to share centralized content beyond your Snowflake account. That is, to share your data with other Snowflake accounts, you may consider using Snowflake collaboration in any of the following configurations:

- Privacy-preserving collaboration: Account-to-account or account-to-multiple-accounts private, secure sharing of data, data services, and applications (applications are currently in private preview) powered by Snowgrid, Snowflake’s cross-cloud technology layer that interconnects your business’ ecosystems across regions and clouds so you can operate at global scale. When sharing the data itself is not an option, specified accounts can be granted the ability to analyze the data without actually exposing it.

- Share with companies not yet on Snowflake: If the company you are looking to share data with is not yet on Snowflake, you can provision managed accounts that are either read-only or full read-write accounts.

- Snowflake Marketplace: Built on Snowflake Secure Data Sharing, Snowflake Marketplace allows consumers to discover, evaluate, and purchase third-party data and data services, and allows providers to market their own products across the Data Cloud. You can access the most current data sets available and receive automatic real-time updates directly in your Snowflake account to query without transformation and combine with your own data.

Listings shared either privately or publicly via Snowflake Marketplace can be used with automated cross-cloud auto-fulfillment (currently in public preview) and monetization with business partners (internal or external to your organization). Whether a provider is sharing privately or publicly, consumers can find the shared content in the Snowflake user interface. Publicly sharing data on Snowflake Marketplace also allows providers to advertise their listings to the Snowflake community. Shared data is made available in listings that include metadata such as business needs, usage scenarios, and sample SQL queries to easily draw value from the shared content.

Keep in mind, however, that any table or view in Snowflake can support up to one row access policy at a time. That means if you need to share data that has an existing RAP, you will need to make that content available in a secure view to be included in an outbound share, or clone the content and remove the existing RAP to apply the account-level RAP.

Snowflake gives you the tools to simplify your design and secure your data. Here we did not need to deploy views for every business user or use case. Instead, by defining a policy once and applying it to the underlying tables, Snowflake’s RBAC takes care of filtering out that data for you.

Until next time!